Pixels of Tomorrow is a business founded by Guillaume Abadie, a former Graphics Programmer at Epic Games with 8 years of experience and a track record of pushing the boundaries of computer graphics in Unreal Engine. Guillaume is best known as the author of Unreal Engine's Temporal-Super-Resolution and Depth of Field, both of which have shipped in multiple games.

Most notable projects:

December 2023: Lego Fortnite

Renderer profiling and optimization on PS5 & XSX

General GPU profiling and optimization on PS5 & XSX,

improvements of Temporal-Super-Resolution,

TODO

GDC 2023: Fortnite Editor

TSR asset compatibility between Fortnite and vanilla UE5

Improvements to Temporal-Super-Resolution so that Fortnite works with vanilla UE5's TSR settings, ensuring full asset compatibility and consistent behavior between UE5 and the Fortnite Editor,

TODO

2022: Fortnite Chapter 4 at 60hz

Temporal-Super-Resolution at 1.6ms on PS5 & XSX

Rewrite/optimization of Temporal-Super-Resolution for 60hz,

1.6ms 1080p -> 4k on PS5 & XSX.

TODO

December 2021: The Matrix Awakens on PS5 & XSX

Temporal-Super-Resolution shipping at 30hz

First shipping of Temporal-Super-Resolution prototype,

3.2ms 1080p -> 4k on PS5 & XSX.

TODO

2021: Unreal Engine 5.0 Early Access

Temporal-Super-Resolution prototype

After our UE5 announcement

TODO

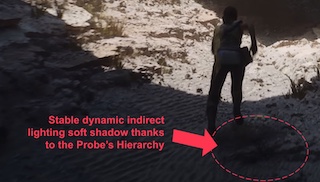

2020: Lumen in the land of Nanite, 5.0 annoucement

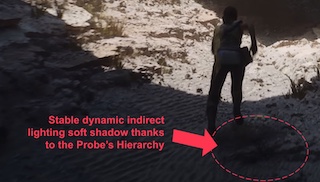

Noise reduction for Lumen using a probe hierachy

Screen space denoising aproaches for global illumination just doesn't work: there needed to be a lot of ray sharing, but it meant a lot of blur, even on high frequency normal nanite geometry tends to have. Plus more sharing, meant larger screen space kernel, with more sample, that was also very costly. There is also challenge of high density object like bushes where a screen space aproach doesn't stand a chance to succeed.

The fundamental idea was simply to do this ray intersection sharing before the resolve with the gbuffer's surfaces. Probes are great for this, but they needed to be where the gbuffer is, hence the idea of placing the probes automatically on the fly from the depth buffer. This has the advantage of working with any type of geometry: static meshes, animated skeletal meshes, opaque GPU particles...

One advantage is that the stochastic probe occlusion testing rays are very short: only up to the probe. So they don't thrash the GPU cache too much, as they stay very local to the neighborhood of the shaded pixel. The probes' rays, on the other hand, are shot in more structured and coherent directions, which makes it very easy to group the directions of different probes together and trace the scene in a memory-coherent way, making the most of the GPU's hierarchical caches.

But this technology went further. The noise in a signal depends on the probability of finding objects in the scene, and global illumination by nature needs to sample almost all directions. This means the probability of hitting an object has an inverse-square falloff with its distance from the shading point. As a result, a bright object can cast indirect lighting without noise when close by, but noisy lighting when further away.

To counter this phenomenon, the amount of ray sharing needs to increase to compensate for that noise, but increasing it linearly with how far an object is becomes impracticable in terms of performance.

This is where the probes actually shared more distant rays in a hierarchical way: probes of hierarchy level N had M rays, terminating at the parent probes of hierarchy level N+1, which had 4 times more rays.

These are more distant rays, but because they live in probes that are 2 times larger, the increase in the number of rays per probe is compensated by being shared across a wider surface to shade. So in the end, the number of rays shot at each distance remains exactly consistent across the screen.

An object is too far and is causing noise or instability? Sure, no problem: just add another level to the hierarchy. The number of rays shot per level ends up identical; the only extra costs are the probe placement, which gets cheaper and cheaper as the larger probes are fewer, and the compositing of a parent probe into its child probes, which is also very cheap because it is very memory-coherent texture compositing.
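The constant ray budget per level can be checked with a little arithmetic. The sketch below is only an illustration of the 2x probe size / 4x rays relationship described above; the screen size, base probe spacing and base ray count are made-up numbers, not the values used in the demo.

```python
def rays_per_level(screen_w, screen_h, base_spacing_px, base_rays_per_probe, levels):
    """Total rays traced at each hierarchy level of a screen-space probe grid."""
    totals = []
    for level in range(levels):
        # Each level uses probes that are 2x larger, so 4x fewer of them.
        spacing = base_spacing_px * 2 ** level
        probe_count = (screen_w // spacing) * (screen_h // spacing)
        # Each level's probes carry 4x more rays than the level below.
        rays_per_probe = base_rays_per_probe * 4 ** level
        totals.append(probe_count * rays_per_probe)
    return totals


# Hypothetical numbers, chosen so that every grid divides the screen evenly.
totals = rays_per_level(2048, 1024, base_spacing_px=8, base_rays_per_probe=16, levels=4)
print(totals)
```

With these assumed numbers every level traces the same 524288 rays: quadrupling the rays per probe is exactly cancelled by having a quarter as many probes.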

We ended up shipping the demo on PS5 with 4 levels in the probe hierarchy, to be completely stable and still reliably cast dynamic soft indirect shadows of the character onto the environment.

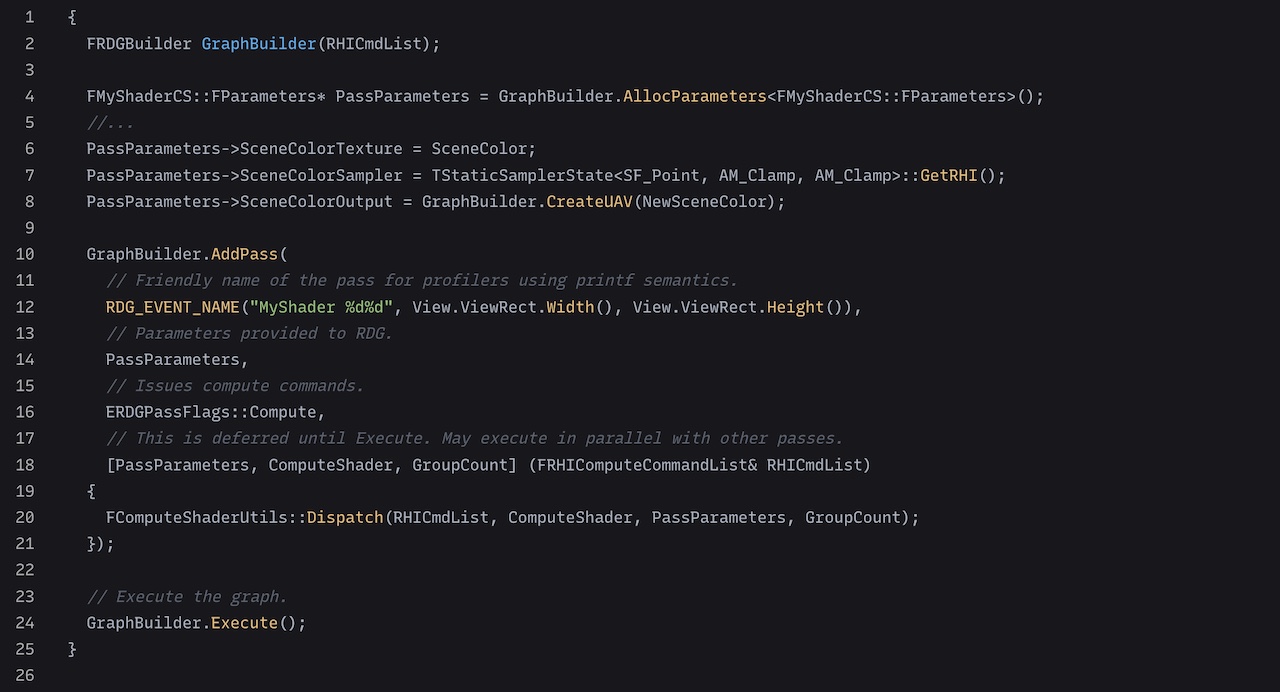

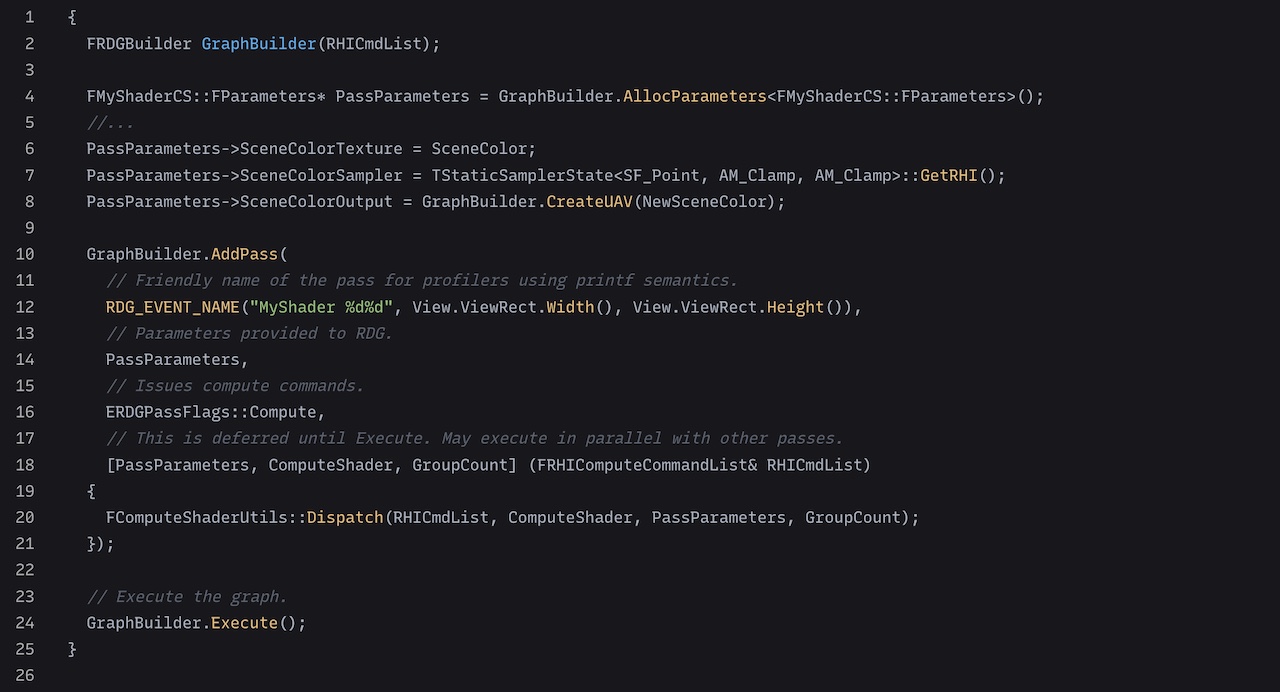

April 2019: Rendering Dependency Graph in UE 4.22

Design of the user-facing API from the user's perspective, as this is the graphics programmer's primary tool

Most of UE4's renderer was implemented directly over the RHI, manually allocating ref-counted resources from a render target pool. In addition, shader parameter binding was very error prone. And with the arrival of D3D12 and its resource transitions, these had to be done manually, adding even more bugs in the many code paths of the renderer.

Credit to Martin Mittring's vision: UE4 already had a pass-based render graph early on, named the post process graph. But it was only used in the post-processing chain of the renderer. Meanwhile, other engines were already adopting a render graph approach in production for their entire renderer.

Thanks to Fortnite's success and the possibility to grow the rendering team, and with the start of the secret UE5 effort, it was clear we needed to properly invest in a render graph for improved work efficiency and code maintenance of the renderer. The post process graph was a great first step, from which we learned some very valuable lessons to build a new one.

Being such a central, everyday tool in graphics programmers' daily lives, touching absolutely all of the renderer's code base, we had to make sure the design was as robust and pleasant to work with as possible. The design was therefore a team effort by Marcus Wassmer, Brian Karis, Daniel Wright, Arne Schnober and myself. Many prototypes were made to bring in different ideas and merge them into a converged API we would all agree to commit to.

Some key axes were decided:

- Recognise that the API should be adapted to the renderer's needs, not the other way around, since the amount of renderer code was, is and always will be larger than RDG's implementation details;

- Recognise that the API is the daily tool of graphics programmers, and as such must be as easy as possible to work with, so that these users can focus on the complexity of the actual rendering algorithms UE5 was targeting to offer rather than the complexity of the API itself. That also means reducing boilerplate and catching common mistakes as early as possible through rigorous validation, to minimise the time spent debugging.
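To make the "declare passes, validate early" idea concrete, here is a deliberately tiny toy graph. It is not RDG's actual API (which is C++); every class, method and resource name below is hypothetical. It only illustrates two things a render graph can do for its users: reject a mistake at setup time, and drop passes that do not contribute to the final output.

```python
class ToyRenderGraph:
    """Toy pass-based graph: passes declare their reads and writes up front."""

    def __init__(self):
        self.resources = {}  # texture name -> name of the pass that last wrote it
        self.passes = []     # (pass name, reads, writes, callback)

    def create_texture(self, name):
        if name in self.resources:
            raise ValueError(f"texture '{name}' created twice")
        self.resources[name] = None  # created, but not written yet
        return name

    def add_pass(self, name, reads, writes, execute):
        # Validation happens at setup time, long before anything would run.
        for r in reads:
            if r not in self.resources:
                raise ValueError(f"pass '{name}' reads unknown texture '{r}'")
            if self.resources[r] is None:
                raise ValueError(f"pass '{name}' reads '{r}' before any pass wrote it")
        for w in writes:
            if w not in self.resources:
                raise ValueError(f"pass '{name}' writes unknown texture '{w}'")
            self.resources[w] = name
        self.passes.append((name, tuple(reads), tuple(writes), execute))

    def execute(self, outputs):
        # Walk backwards and keep only passes that feed the requested outputs.
        needed = set(outputs)
        kept = []
        for name, reads, writes, fn in reversed(self.passes):
            if needed.intersection(writes):
                needed.update(reads)
                kept.append((name, fn))
        executed = []
        for name, fn in reversed(kept):
            fn()
            executed.append(name)
        return executed


graph = ToyRenderGraph()
depth = graph.create_texture("SceneDepth")
color = graph.create_texture("SceneColor")
debug = graph.create_texture("DebugOverlay")
graph.add_pass("DepthPrepass", [], [depth], lambda: None)
graph.add_pass("BasePass", [depth], [color], lambda: None)
graph.add_pass("DebugDraw", [depth], [debug], lambda: None)
order = graph.execute([color])
print(order)
```

In this toy, `DebugDraw` is culled because nothing requested `DebugOverlay`, and a pass that reads a texture nobody has written fails immediately in `add_pass` rather than producing garbage pixels later.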

A lot of designs considered state-of-the-art at the time were already published in the literature to just adopt, but I'm very proud that we recognised as a team what we wanted to see done differently, and executed nevertheless for a refreshing approach. I still consider RDG's ease of use as an API to be an under-advertised but essential backbone of UE5's graphics fidelity success.

GDC 2019: Troll by Goodbye Kansas

UE 4.22's denoisers for reflections, shadows & ambient occlusion

DirectX's DXR ray-tracing API was coming online, and Unreal had to get on the train and develop its own ray-tracing & denoising technology for enterprise use cases, and hopefully for shipping in games too. Coming with TAAU experience and gathering-algorithm experience from DOF, I was trusted to implement Unreal's screen-space denoisers for shadows, ambient occlusion & reflections.

The simplest denoiser to start from is the shadow denoiser. From a surface to be shaded, rays are shot towards the area light in a cone. The challenge is that this cone-shaped tracing creates soft and noisy shadows from far shadowing objects, but sharper and less noisy shadows from closer ones.
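Why the occluder distance drives the softness follows from similar triangles. The sketch below is the textbook penumbra estimate for an area light, not code from the denoiser; the function name, parameters and units are assumptions made for illustration.

```python
def penumbra_width(light_width, dist_to_light, hit_distance):
    """Penumbra width on the receiver, from similar triangles.

    light_width   -- width of the area light
    dist_to_light -- distance from the shaded surface to the light
    hit_distance  -- distance from the shaded surface to the occluder (ray hit)
    """
    if not 0.0 < hit_distance < dist_to_light:
        raise ValueError("the occluder must sit between the surface and the light")
    return light_width * hit_distance / (dist_to_light - hit_distance)


# Hypothetical scene: a 1 m wide light, 10 m away from the shaded surface.
near = penumbra_width(1.0, 10.0, 0.5)  # occluder 0.5 m above the surface
far = penumbra_width(1.0, 10.0, 5.0)   # occluder halfway to the light
print(near, far)
```

The nearby occluder gives a penumbra of roughly 5 cm while the distant one gives a full metre, which is why the same light can produce both sharp and soft, noisy shadows in a single frame.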

A problem is that the distance of a shadow caster is completely arbitrary in the scene, so we can end up with a mix of soft and sharp shadows. We don't want to overblur the sharp shadows, but we do want to reduce noise in the soft ones. Luckily, this is exactly what happens with DOF's Descending-Ring-Bucketing for the background, so using this algorithm instead of a classic weighted average solved this in-between complexity.

Lighting artistry 101 is that a scene is often lit with three-point lighting: a key light, a fill light, and a rim/back light. So to save performance, the shadow denoiser was actually capable of denoising up to 4 shadows from different light sources at the same time, sharing some of the texture fetches and bilateral costs across the multiple lights.

Ambient occlusion is pretty much the same as a shadow, except that the rays are shot in a cosine-distributed hemisphere. So the ambient-occlusion denoiser also used the Descending-Ring-Bucketing algorithm with the ray hit distance, to avoid overblurring ambient-occlusion contacts in geometric details.

While the shadow's penumbra only depends on the distance to the occluder and the light's dimensions, the reflection denoiser is a bit different: it depends on the BRDF of the surface, which depends on the surface properties but, most importantly, also on where the incident view V is coming from. This single difference makes the ray's hit distance no longer enough for bilateral rejection.

This simple difference makes the screen-space blurring radius for reflections behave analogously to DOF's circle of confusion. The surface properties that control the BRDF are analogous to the lens settings, and the shading surface's distance is analogous to the lens' focus distance. So the reflection denoiser doesn't use the ray hit distance directly, but a circle of confusion computed from the shading distance from the camera, the shading material properties and the ray hit distance.

Goodbye Kansas' blog

Youtube

GDC 2018: Star Wars Reflections Demo

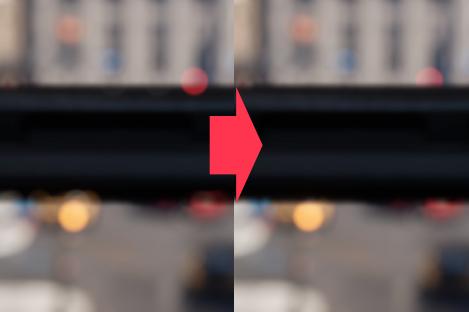

UE 4.20's Cinematic Depth-of-Field

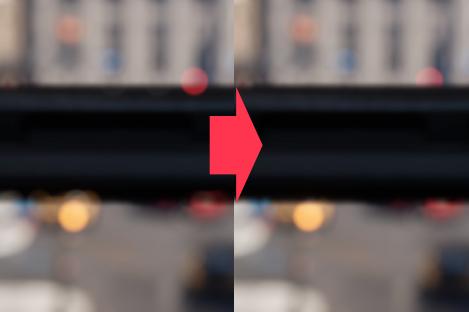

With Nvidia wanting to showcase its advances in ray-tracing technology, UE4's depth of field was becoming a bottleneck for image quality and for a better cinematic look of the renderer. With experience in real-time image processing from TAAU, and in compositing and PCSS shadow filtering from The Human Race project, I took on the challenge of implementing a brand new DOF algorithm.

Gathering algorithms have the nice advantage of being cheap, and of scaling well in performance as their kernel gets wider thanks to the mip chain. But there were still open challenges in the state of the art in producing plausible occlusion between the far background and the closer background. This is where a great breakthrough was made: starting with the furthest-away samples first, as a cheap pre-sort from further to closer objects. Coupling this with ring-based accumulation, where each ring can possibly occlude the larger ones, converged to what is the Descending-Ring-Bucketing algorithm (or DRB): what I consider my first great invention in computer graphics.

But scattering algorithms are awesome too, ensuring clear bokeh free of sampling artifacts. Hybrid approaches had been attempted in the past, but still with occlusion order artifacts. Luckily, this is where previous experience with shadow filtering, and an attempt on The Human Race to implement variance shadow maps, actually came in handy: build a view-frustum-aligned variance shadow map, but of the CoC in the gathering kernels, and apply this shadow term to the scattered bokeh.
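For readers unfamiliar with variance shadow maps, the core of the technique is a one-tailed Chebyshev bound evaluated from two filtered moments. The sketch below shows only that generic bound; how the moments are built from the CoC and applied to the scattered bokeh is specific to the DOF implementation and is not reproduced here. The function name and the `min_variance` clamp are assumptions.

```python
def chebyshev_visibility(mean, mean_sq, t, min_variance=1e-6):
    """Upper bound on the fraction of filtered samples whose value is >= t.

    mean    -- filtered first moment, E[x]
    mean_sq -- filtered second moment, E[x^2]
    t       -- value of the thing being tested against the filtered region
    """
    if t <= mean:
        return 1.0  # the bound is only meaningful past the mean; treat as unoccluded
    variance = max(mean_sq - mean * mean, min_variance)
    d = t - mean
    return variance / (variance + d * d)


# Hypothetical filtered region: half the samples at 1.0, half at 3.0.
mean = (1.0 + 3.0) / 2.0            # 2.0
mean_sq = (1.0 ** 2 + 3.0 ** 2) / 2.0  # 5.0, so the variance is 1.0
print(chebyshev_visibility(mean, mean_sq, 3.0))
print(chebyshev_visibility(mean, mean_sq, 5.0))
```

In this example the bound at `t = 3.0` is 0.5, which matches the true fraction of samples at or beyond 3.0, and it falls to 0.1 at `t = 5.0`. The appeal is that the two moments can be pre-filtered like any other texture, so a soft occlusion term costs a single lookup.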

This was used in the Star Wars ray-tracing tech demo, and presented at SIGGRAPH 2018 as A Life of a Bokeh.

A Life of a Bokeh (PPTX | 153MB)

FX Guide

Youtube

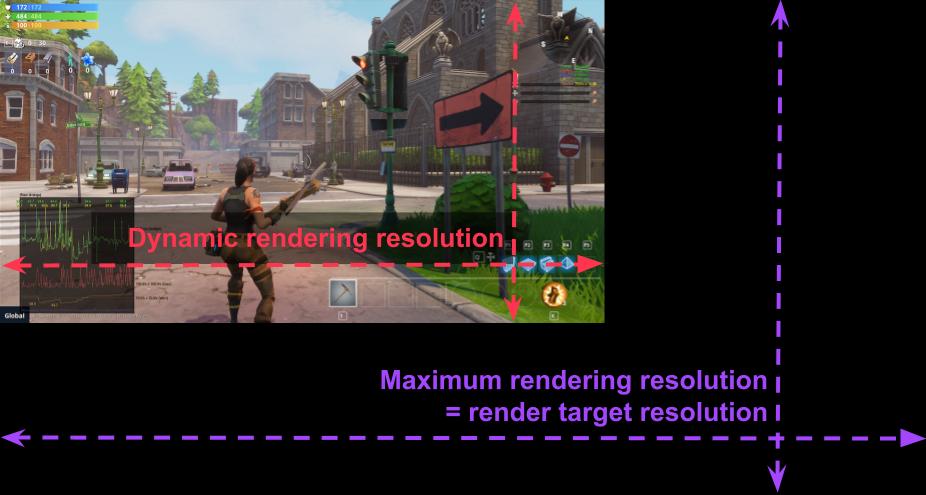

March 2018: Fortnite Battle Royale Season 3

Solid GPU 60hz thanks to dynamic resolution + TAAU

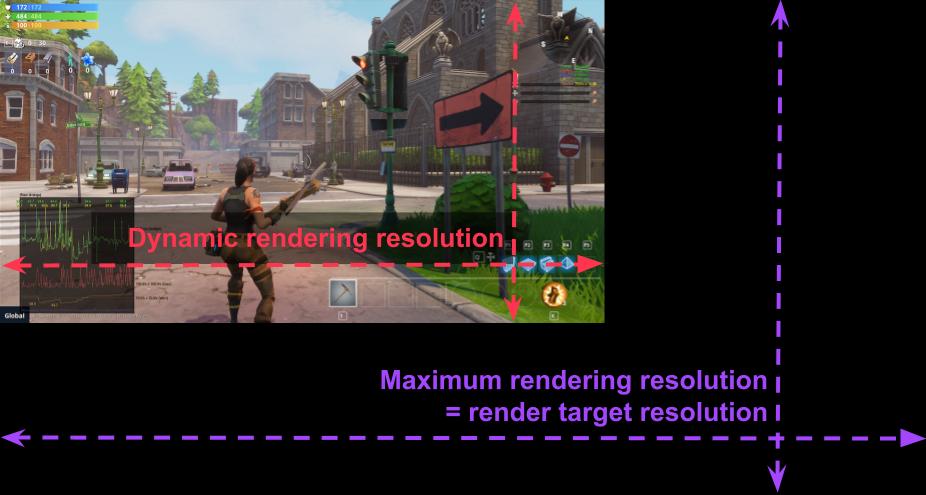

Fortnite Battle Royale's release was starting to see a lot of success among gamers, but on base PS4 and Xbox One the game still only offered either a 30hz game mode or an uncapped frame rate that couldn't maintain a solid 60hz. Due to the competitive nature of the shooter, having a solid 60hz mode became a company priority to satisfy these gamers.

While many engine and gameplay programmers worked collectively in a company-wide effort to improve CPU performance to reach 60hz, Ben Woodhouse, Console Lead at the time, was alone on the profiling and renderer configuration for the GPU side, and was pushing for automated GPU timing tests. But one thing was missing in Unreal Engine that other game engines had, and that is a great tool for GPU performance: dynamic resolution. In particular, For Honor from Ubisoft had just shipped using a horizontal dynamic resolution along with a TAA capable of upscaling too: TAAU. With the graphics team still small at the time, I was trusted to implement these two.

Our dynamic resolution and TAAU implementation diverged from Ubisoft's in several key ways to support Fortnite's needs on console, but also dynamic resolution on PC for Oculus VR, as Epic Games had just shipped Robo Recall.

First, instead of the 3 upscaling factors supported through specifically optimised shader permutations, we went for arbitrary upscaling factors for finer-grained dynamic resolution. Our reasoning was that while the TAAU shader might be a little slower when upscaling from LDS with support for any upscale factor, we would still be able to render at slightly higher resolutions. Also, TAAU being a temporal accumulator, it meant we could change the resolution a lot more often than previously done in other engines using a spatial upscaler, where the change was more visible to the player. We also introduced a dynamically varying Temporal-AA jitter sequence length, to maintain the number of scene samples per display pixel and avoid introducing artifacts in the converged image.
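The jitter-length idea can be sketched in a few lines. This is a minimal illustration, assuming a base sequence of 8 samples at native resolution and a Halton (2, 3) sequence for the sub-pixel offsets; both are assumptions for the example, and the function names are hypothetical.

```python
import math


def jitter_sequence_length(base_samples, resolution_fraction):
    """Grow the jitter sequence so samples per display pixel stay constant.

    Rendering at a fraction f of the display resolution per axis produces
    f^2 as many rendered pixels, so the sequence is lengthened by 1 / f^2.
    """
    if not 0.0 < resolution_fraction <= 1.0:
        raise ValueError("resolution_fraction must be in (0, 1]")
    return math.ceil(base_samples / (resolution_fraction ** 2))


def halton(index, base):
    """Radical inverse of a 1-based index in the given base, in [0, 1)."""
    result, f = 0.0, 1.0
    while index > 0:
        f /= base
        result += f * (index % base)
        index //= base
    return result


def jitter_offsets(base_samples, resolution_fraction):
    """Sub-pixel offsets in [-0.5, 0.5), in units of rendered pixels."""
    n = jitter_sequence_length(base_samples, resolution_fraction)
    return [(halton(i + 1, 2) - 0.5, halton(i + 1, 3) - 0.5) for i in range(n)]


print(jitter_sequence_length(8, 1.0))  # native resolution
print(jitter_sequence_length(8, 0.5))  # half resolution per axis
```

Under these assumptions, half resolution per axis means each rendered pixel covers four display pixels, so the sequence grows from 8 to 32 entries to keep the same sample density on the display grid.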

Second, no descriptor reallocation on rendering resolution changes. For one, we wanted arbitrary dynamic resolution upscale factors, which would have meant too many descriptors. Also, our explicit render-target-pool-based renderer was complex, and allocating descriptors up front to avoid CPU hitches was not feasible. PC on D3D11 also did not support transient memory allocation yet, and doing it on the fly on console on the GPU's timeline was simply too costly. Instead we went for a static render target size, and simply resized the viewport within it.

The renderer was already doing this for the editor viewports, which could be of different resolutions at the artists' own discretion, but also for VR, which actually renders the two eye views into the same render target for stereo rendering. Suffice it to say there were still many renderer algorithms sampling outside the viewport, which meant unrendered garbage pixels creeping in at the edges of the screen. But we decided to bite the bullet and go fix all these bugs, as this covered not only our dynamic resolution but also the VR and editor viewport use cases. There hasn't been a year since without discovering such a problem in some internally still unused renderer code path. The last graphics artifact we fixed before going gold on The Matrix Awakens for UE 5.0 was such a problem, in a code path that was ~6 years old at the time: SSR on the translucent materials used for the cars' windshields.
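A typical fix for this class of bug is to clamp texture coordinates to the viewport rather than to the whole render target. The sketch below shows that generic idea, assuming bilinear filtering and pixel-unit viewport rectangles; the function names are hypothetical and this is not code from the engine.

```python
def viewport_uv_bounds(view_min, view_size, target_size):
    """UV range in which a bilinear fetch only touches pixels inside the viewport.

    The half-texel inset keeps the 2x2 bilinear footprint from reaching
    neighbouring pixels that were never rendered this frame.
    """
    (x0, y0), (w, h), (tw, th) = view_min, view_size, target_size
    uv_min = ((x0 + 0.5) / tw, (y0 + 0.5) / th)
    uv_max = ((x0 + w - 0.5) / tw, (y0 + h - 0.5) / th)
    return uv_min, uv_max


def clamp_uv(uv, bounds):
    """Clamp a UV coordinate to the viewport bounds."""
    (u0, v0), (u1, v1) = bounds
    return (min(max(uv[0], u0), u1), min(max(uv[1], v0), v1))


# Hypothetical case: a 1920x1080 viewport inside a static 3840x2160 target.
bounds = viewport_uv_bounds((0, 0), (1920, 1080), (3840, 2160))
print(clamp_uv((0.6, 0.2), bounds))  # u is pulled back inside the viewport
```

Without the clamp, a fetch at `u = 0.6` would read from the right half of the target, which in this example holds stale or uninitialized pixels.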

On this occasion, Brian Karis, author of UE4's TAA, brain-dumped all his knowledge of the tricks, features & failed experiments on TAA for me to understand and improve upon. And while TAAU's limitations are nowadays criticised in terms of quality compared to more modern upscalers, it is worth underlining that 90% of the engineering time I spent on this task went to:

- Render-thread proofing of the dynamically changing rendering resolution, for quicker resolution changes when the GPU goes over budget;

- TAA being in the middle of Unreal's post-processing chain, major changes to half of the post-processing algorithms to support this resolution change;

- Hunting down and fixing all the renderer algorithms used by Fortnite that sampled uninitialized pixels on screen edges;

- Optimising TAAU, more complex than TAA, to run as fast as possible on base XB1 and PS4.

What we ended up with is a production-ready, tightly coupled dynamic resolution + TAAU duo for Unreal Engine's future games, capable of changing resolution as frequently as every 8 frames to max out the GPU as much as possible (at 60fps, that is an unprecedented 7 resolution changes per second), detecting and reacting to 2 consecutive over-budget frames with the minimum possible response latency: that of the rendering pipeline. Unreal Engine's dynamic resolution heuristic has shipped many games on console, and remains identical today, at the time of writing with UE 5.4, 6 years later.
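The shape of such a controller can be sketched as follows. Only the two triggers come from the text above (a periodic change every 8 frames, and an early reaction after 2 consecutive over-budget frames); everything else, including the class name, the clamping range and the assumption that GPU time scales with pixel count, is a simplification made for illustration and not the engine's actual heuristic.

```python
import math


class DynamicResolutionSketch:
    """Toy controller: periodic updates plus an early reaction when over budget."""

    def __init__(self, budget_ms, min_fraction=0.5, max_fraction=1.0,
                 change_period=8, panic_frames=2):
        self.budget_ms = budget_ms
        self.min_fraction = min_fraction
        self.max_fraction = max_fraction
        self.change_period = change_period
        self.panic_frames = panic_frames
        self.fraction = max_fraction  # per-axis resolution fraction
        self._history = []            # GPU timings since the last change
        self._over_budget_streak = 0

    def on_frame(self, gpu_ms):
        """Feed one GPU timing; returns the fraction to use for the next frame."""
        self._history.append(gpu_ms)
        self._over_budget_streak = (
            self._over_budget_streak + 1 if gpu_ms > self.budget_ms else 0)
        panic = self._over_budget_streak >= self.panic_frames
        periodic = len(self._history) >= self.change_period
        if not (panic or periodic):
            return self.fraction
        # Assumption: GPU time is proportional to pixel count (fraction squared).
        recent = self._history[-self.panic_frames:] if panic else self._history
        avg_ms = sum(recent) / len(recent)
        target = self.fraction * math.sqrt(self.budget_ms / avg_ms)
        self.fraction = min(max(target, self.min_fraction), self.max_fraction)
        self._history = []
        self._over_budget_streak = 0
        return self.fraction


ctrl = DynamicResolutionSketch(budget_ms=16.0)
ctrl.on_frame(25.0)                   # first over-budget frame: no change yet
after_panic = ctrl.on_frame(25.0)     # second in a row: drop the resolution
print(after_panic)
```

In this toy run, two consecutive 25 ms frames against a 16 ms budget lower the per-axis fraction from 1.0 to about 0.8; if the GPU then runs comfortably under budget, the fraction is raised again at the next 8-frame boundary.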

Unreal Community

Eurogamer

Forbes

GDC 2017: The Human Race

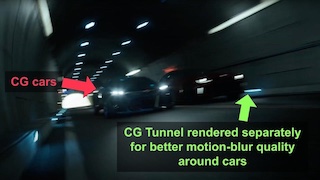

On GPU real-time movie-quality compositing pipeline

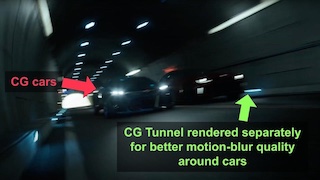

Yearly tradion at Epic Games in the UE4 years, Kim Libreri, Epic Games CTO pushed another graphic boundary pushing demo. This time it was to innovate in virtual production use cases using a game engine, to do live 24hz in-engine compositing of a quality worthy of his previous experience compositing the Matrix Movies and movies he made after.

The project was a collaboration with The Mill, who were responsible for shooting the live raw footage and IBL on the actual canyon road, undistorting the 1080p plate's EXRs to be streamed raw to the GPU, and set dressing in Unreal Engine. Marcus Wassmer, nowadays Unreal Engine Vice-President, had reimplemented from scratch an EXR streamer from the first PCI Express drives available at the time to the GPU.

Brian Karis had built and optimised the fully image-based lighting (IBL) GPU processing for lighting the car, with baked spherical-Gaussian directional occlusion. He had also implemented rotational motion blur for the wheels, as a translucent cylinder object around each wheel sampling the scene color.

My role was, from this uncompressed video plate and the animated CG scene in Unreal, to harness the renderer in ways that were new and innovative at the time to implement the full compositing on the GPU:

- Rendering the CG cars with virtual shadows of the environment cast on the car;

- Virtual shadows of the virtual environment and car cast on the plate as an RGB matte;

- Rendering a soft-shadow RGB matte to apply on the plate, using an improved Percentage-Closer-Soft-Shadows (PCSS) style shadowing algorithm;

- Screen-Space-Reflection (SSR) of the raw plate on the car to better integrate the car in the plate;

- Integration of a brand new FFT bloom authored by David Hill for nice specular hits on the car;

- Implementation of a light-wrap effect to have bloom of the plate over the car;

- Lens distortion reapplied to exactly match the lens-distortion calibration of the camera The Mill used on set.

The compositing pipeline had different configurations, selected on a per-shot basis to achieve different compositing goals: the outdoor sequence with video streaming, whether there was a virtual soft shadow to apply, the car entering the tunnel entrance, and the tunnel sequence where the car and tunnel were rendered separately for the best motion blur quality of the tunnel behind the cars. Sometimes the backplate and alpha channel were used for different purposes according to the compositing pipeline settings.

Our team ended up winning SIGGRAPH 2017's Real-Time Live award.
